Comparing GPT-4 and Ollama-3

Objective

Compare GPT-4 and Ollama-3 through the narrow lens of a single test: extracting skills from a resume.

Background

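GPT-4 (from OpenAI) and Llama 3 (from Meta) are both LLMs that can read and interpret text. Strictly speaking, Ollama is not a model but a tool for running open models such as Llama 3 on your own machine; in this nugget, "Ollama-3" is shorthand for the llama3.2 model running under Ollama. How well do the two models extract skills from a resume? Below you will learn how to use both models for the task, and then you decide which one did better.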

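Bear in mind that GPT-4 is widely reported to have more than a trillion parameters (OpenAI has not published the figure), while Ollama-3 has a few billion. GPT-4 is also pay as you go, while Ollama-3 is free to download and runs on your own hardware. So this is apples and oranges. But the question here is: do you need an apple, or an orange?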

Prerequisites

- An account on platform.openai.com. Read the docs.

- Ollama on macOS (it also runs on Linux and Windows, but this nugget uses macOS). Read the docs and install it.

- A sample resume.

Steps

Step 1 - Set up Ollama

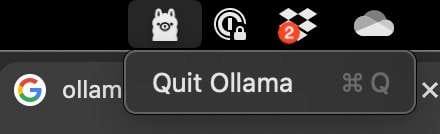

Let's start with Ollama. Once it is installed and running, you should see the Ollama icon in the macOS menu bar. If it is there, Ollama is listening on port 11434 (its default) on localhost.

You can verify this by listing the listening TCP sockets and looking for port 11434 in the output:

netstat -anvp tcp | awk 'NR<3 || /LISTEN/'
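If you would rather check from Python, a minimal sketch is below. It simply tries to open a TCP connection to the port, so it only tells you that something is listening there, not that it is Ollama. It assumes Ollama's default port 11434; is_port_open is a hypothetical helper, not part of Ollama.

```python
import socket

# Ollama's default port (assumption: you have not changed it).
OLLAMA_PORT = 11434


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection tries each address the host resolves to and
        # raises OSError (e.g. ConnectionRefusedError) if none accepts.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

With Ollama running, is_port_open("localhost", OLLAMA_PORT) should return True; if it returns False, start the Ollama app and try again.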

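Now you are ready to use it. Ollama exposes an OpenAI-compatible API, so the official openai Python package (pip install openai) can talk to it. Create the client: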

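from openai import OpenAI

# Ollama ignores the API key, but the client requires one, so pass a placeholder.
client = OpenAI(
    api_key="ollama",
    base_url="http://localhost:11434/v1",  # Ollama's OpenAI-compatible endpoint
)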

Then send the request to the Ollama server listening on localhost:11434. This assumes you have already downloaded the model, for example with ollama pull llama3.2.

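response = client.completions.create(
    model="llama3.2",
    prompt=prompt,     # resume text plus the question
    stream=False,      # wait for the whole answer instead of streaming tokens
    temperature=0.3,   # low temperature for more consistent output
)

skills = response.choices[0].text.strip()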

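Here prompt is your input, containing the resume text plus the question you want answered, and skills is the text Ollama returns. Ollama does not check the API key; the client just needs some value, so the placeholder "ollama" is enough. The secret key you do need is for GPT-4 in the next step: you will find it in your OpenAI account settings, so copy it and store it safely. Writing the full Python program is left as an exercise.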
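One way to approach the exercise is sketched below. It assumes the resume is a plain-text file and that the openai package is installed. The prompt wording and the helper names (build_prompt, extract_skills_with_ollama, run) are illustrative, not the exact ones used for the test in this nugget. The client is passed in as a parameter so the request logic can be tried without a running server, and openai is imported inside make_ollama_client so the other helpers work without the package.

```python
from pathlib import Path


def build_prompt(resume_text: str) -> str:
    """Combine an instruction with the resume text (illustrative wording)."""
    return (
        "List the skills mentioned in the resume below as a comma-separated list. "
        "Reply with the list only.\n\n"
        f"Resume:\n{resume_text}"
    )


def make_ollama_client():
    """Create an OpenAI client pointed at the local Ollama server."""
    from openai import OpenAI  # imported here so the helpers above work without the package

    return OpenAI(api_key="ollama", base_url="http://localhost:11434/v1")


def extract_skills_with_ollama(client, resume_text: str, model: str = "llama3.2") -> str:
    """Send the prompt to Ollama's completions endpoint and return the reply text."""
    response = client.completions.create(
        model=model,
        prompt=build_prompt(resume_text),
        stream=False,
        temperature=0.3,
    )
    return response.choices[0].text.strip()


def run(resume_path: str) -> str:
    """Read a plain-text resume from disk and return the skills Ollama finds."""
    resume_text = Path(resume_path).read_text(encoding="utf-8")
    return extract_skills_with_ollama(make_ollama_client(), resume_text)
```

With Ollama running, print(run("resume.txt")) prints the model's answer, where resume.txt is a hypothetical file holding your sample resume.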

Step 2 - Set up GPT-4

GPT-4 is even easier, since there is nothing to install, but you do need an OpenAI account with a payment method on file. This is all the code you need:

import os
import openai

# Read the secret key from an environment variable instead of hard-coding it.
openai.api_key = os.environ["OPENAI_API_KEY"]

response = openai.chat.completions.create(
    model="gpt-4",  # or "gpt-3.5-turbo" for faster and cheaper responses
    messages=messages,
    temperature=0.3,
)

skills = response.choices[0].message.content.strip()

The messages parameter looks a bit like this:

messages = [
    {"role": "system", "content": "You are a helpful assistant that finds resume skills."},
    # resume_text holds the full text of the resume
    {"role": "user", "content": f"Resume Skills: {resume_text}"},
]

As before, skills contains the model's response, this time from GPT-4, and writing the complete program is left to you.
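A sketch of the GPT-4 side is below, assuming a plain-text resume, the openai package, and your key in the OPENAI_API_KEY environment variable. Unlike the snippet above, it uses a client object rather than the module-level openai.chat call, and it takes that client as a parameter. Because Ollama also serves an OpenAI-compatible chat completions endpoint, the same function can be called with the Ollama client from Step 1, which would give both models identical messages. The function names are illustrative.

```python
import os


def build_messages(resume_text: str) -> list[dict]:
    """System instruction plus the resume text as the user turn."""
    return [
        {"role": "system", "content": "You are a helpful assistant that finds resume skills."},
        {"role": "user", "content": f"Resume Skills: {resume_text}"},
    ]


def extract_skills_chat(client, resume_text: str, model: str = "gpt-4") -> str:
    """Ask a chat model for the skills in the resume and return its reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(resume_text),
        temperature=0.3,
    )
    return response.choices[0].message.content.strip()


def make_openai_client():
    """Create a client for OpenAI's API using the OPENAI_API_KEY environment variable."""
    from openai import OpenAI  # imported here so the helpers above work without the package

    return OpenAI(api_key=os.environ["OPENAI_API_KEY"])
```

For example, extract_skills_chat(make_openai_client(), resume_text) queries GPT-4, while extract_skills_chat(client, resume_text, model="llama3.2"), with the Ollama client from Step 1, sends the same messages to the local model.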

Test

Here are the skills each model extracted from the same resume.

Ollama-3

Resume skills: Python, Go, Java, Docker, Kubernetes (K8s), gRPC, Kafka, RabbitMQ, PostgreSQL, MySQL, Redshift, BigQuery, Airflow, Git, DynamoDB, REST API, CI/CD (TF & Ansible)

GPT-4

Resume skills: Analytics, Real-time event ingestion, Python 3, arm64 architecture, Ubuntu 22.04 OS, Airflow, NFT Marketplace development, Go, AWS K8s, Microservices, OpenStack, Python 2.7, Python 3.6, CI/CD, Docker, PostgreSQL, RabbitMQ, Enterprise Storage SAN, VMware vCenter, vSphere, Python scripting, PowerShell scripting, gRPC, Kafka, Git, Storage SAN, Redshift, BigQuery, MySQL, REST API, CMS, DynamoDB, Data Engineering, Java, Ansible

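On this single test, GPT-4 extracted about twice as many items as Ollama-3 (35 versus 17). More is not automatically better, though: GPT-4's list includes overlapping entries such as Python 3, Python 3.6, Python 2.7 and Python scripting, or Storage SAN and Enterprise Storage SAN.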
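A rough way to compare the two lists is to split each answer on commas and treat them as sets. The sketch below does an exact, case-insensitive match on the two outputs shown in the Test section, so it undercounts the real overlap: Python versus Python 3, or Kubernetes (K8s) versus AWS K8s, count as different items. The helper names parse_skills and compare are illustrative.

```python
def parse_skills(output: str) -> list[str]:
    """Split a comma-separated answer into items, dropping a 'Resume skills:' label."""
    prefix = "resume skills:"
    if output.lower().startswith(prefix):
        output = output[len(prefix):]
    return [item.strip() for item in output.split(",") if item.strip()]


def compare(a: list[str], b: list[str]) -> dict[str, set[str]]:
    """Exact, case-insensitive comparison of two skill lists."""
    set_a = {s.lower() for s in a}
    set_b = {s.lower() for s in b}
    return {"both": set_a & set_b, "only_a": set_a - set_b, "only_b": set_b - set_a}


OLLAMA_OUTPUT = (
    "Resume skills: Python, Go, Java, Docker, Kubernetes (K8s), gRPC, Kafka, RabbitMQ, "
    "PostgreSQL, MySQL, Redshift, BigQuery, Airflow, Git, DynamoDB, REST API, CI/CD (TF & Ansible)"
)

GPT4_OUTPUT = (
    "Resume skills: Analytics, Real-time event ingestion, Python 3, arm64 architecture, "
    "Ubuntu 22.04 OS, Airflow, NFT Marketplace development, Go, AWS K8s, Microservices, "
    "OpenStack, Python 2.7, Python 3.6, CI/CD, Docker, PostgreSQL, RabbitMQ, "
    "Enterprise Storage SAN, VMware vCenter, vSphere, Python scripting, PowerShell scripting, "
    "gRPC, Kafka, Git, Storage SAN, Redshift, BigQuery, MySQL, REST API, CMS, DynamoDB, "
    "Data Engineering, Java, Ansible"
)

ollama_skills = parse_skills(OLLAMA_OUTPUT)
gpt4_skills = parse_skills(GPT4_OUTPUT)
result = compare(ollama_skills, gpt4_skills)
print(len(ollama_skills), len(gpt4_skills), len(result["both"]))  # 17 35 14
print(sorted(result["only_a"]))  # ['ci/cd (tf & ansible)', 'kubernetes (k8s)', 'python']
```

Fuzzier matching (for example, treating "Python 3" as "Python") would raise the overlap, at the cost of deciding by hand which variants count as the same skill.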

Dig Deeper

GPT-4 has far more parameters than Ollama-3. What does that really mean? Do more parameters mean better performance? Always? Sometimes?